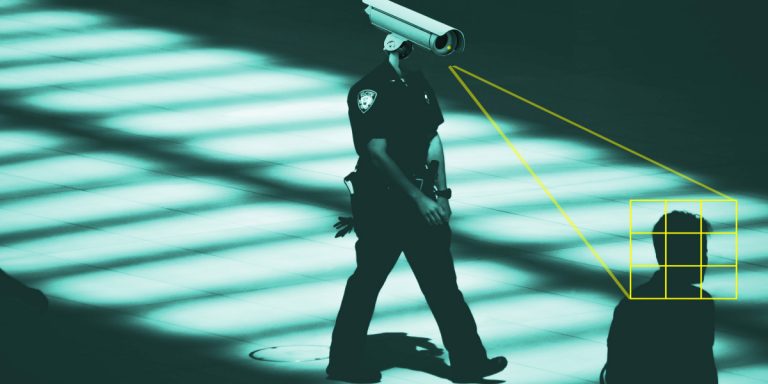

When news broke that a mistaken match from a face recognition system had led Detroit police to arrest Robert Williams for a crime he didn't commit, it was late June, and the nation was already in upheaval over the death of George Floyd a month earlier. Soon after, it emerged that yet another Black man, Michael Oliver, had been arrested under circumstances similar to Williams's. While much of the US continues to cry out for racial justice, a quieter conversation is taking shape about face recognition technology and the police. We'd do well to listen.

When Jennifer Strong and I began reporting on the use of face recognition technology by police for our new podcast, "In Machines We Trust," we knew these AI-powered systems were being adopted by police across the US and in other countries. But we had no idea how much was happening out of the public eye.

For starters, we don't know how often police departments in the US use facial recognition, for the simple reason that in most jurisdictions they don't have to report when they use it to identify a suspect in a crime. The most recent numbers are speculative and date from 2016, but they suggest that at the time, at least half of Americans had photos in a face recognition system. One county in Florida ran 8,000 searches every month.

We also don't know which police departments have facial recognition technology, because it's common for police to obscure their procurement process. There is evidence, for example, that many departments buy their technology using federal grants or nonprofit gifts, which are exempt from certain disclosure laws. In other cases, companies offer police trial periods for their software that let officers use the systems without any official approval or oversight. This allows companies that make face recognition systems to claim their products are in wide use and to give the impression that they are both popular and reliable crime-solving tools.

Protected algorithms that don’t serve

But if facial recognition is known for anything, it's how unreliable it is. As we report in the show, in January London's Metropolitan Police debuted a live facial recognition system that in tests had an accuracy rate of less than 20%. In New York City, the Metropolitan Transportation Authority trialed a system on major thoroughfares with a reported accuracy rate of 0%. The systems are often racially biased as well: one study found that in some commercial systems, even under lab conditions, error rates in identifying darker-skinned women were around 35%. While reporting for the show, we found that it's not uncommon for police to alter photos to improve their chances of finding a match. Some even defended the practice as necessary to doing good police work.

Two of the most controversial and advanced companies in the field, ClearviewAI and NTechLabs, claim to have solved the "bias problem" and reached near-perfect accuracy. ClearviewAI says it is used by around 600 police departments in the US (some experts we spoke to were skeptical of that figure). NTechLabs, based in Russia, has signed on to provide live video facial recognition throughout the city of Moscow.

But there is almost no way to independently verify their claims. Both companies have algorithms that sit on databases of billions of public photos. The National Institute of Standards and Technology (NIST), meanwhile, offers one of the few independent audits of face recognition technology. The NIST Vendor Test uses a much smaller dataset, which, together with the quality and diversity of the photos in that database, limits its power as an auditing tool. ClearviewAI has not taken NIST's most recent test. NTechLabs has taken the static image test and performed well, but there is currently no test in use for live video facial recognition. There is also no independent test specifically for bias.

Recognition in the streets

The recent wave of Black Lives Matter protests, sparked by Floyd's death, has called into question much of what we've accepted about modern policing, including its use of technology. The dark irony is that when people take to the streets to protest racism in policing, some police have turned cutting-edge tools with a known racial bias against those assembled. We know, for example, that the Baltimore police department used face recognition on protesters after the death of Freddie Gray in 2015. And we know that a handful of departments have put out public calls for footage of this year's protests. It's been documented that police in Minneapolis have access to a range of tech, including ClearviewAI's services. According to Jameson Spivack of the Center on Privacy and Technology at Georgetown University, whom we interview in the show, if face recognition is used on BLM protests, it's "targeting and discouraging Black political speech specifically."

After years of struggle for regulation, largely by Black- and brown-led organizations, there has never been a better moment for real change. Microsoft, Amazon, and IBM have all announced discontinuations of or moratoriums on their face recognition products. In the past several months, a handful of major US cities have announced bans or moratoriums on the technology. On the other hand, the technology is moving quickly. The systems' capabilities, as well as their potential for misuse and abuse, will continue to grow by leaps and bounds. We're already starting to see police departments and technology providers move beyond static, retrospective face recognition systems to live video analytics that are integrated with other kinds of data streams, like audio gunshot surveillance systems.

Some of the law enforcement officials we spoke to said they shouldn't be left with archaic tools to fight crime in the 21st century. And it's true that in some cases, technology can make policing less violent and less prone to human bias.

But after months of reporting out our audio miniseries, I was left with a feeling of foreboding. The stakes are rising by the day, and so far the public has been left far behind in its understanding of what is going on. It's not clear how that will change unless people on all sides of this issue can agree that everyone has a right to be informed.